Contents: Neural Networks.

Week 4

Neural Networks

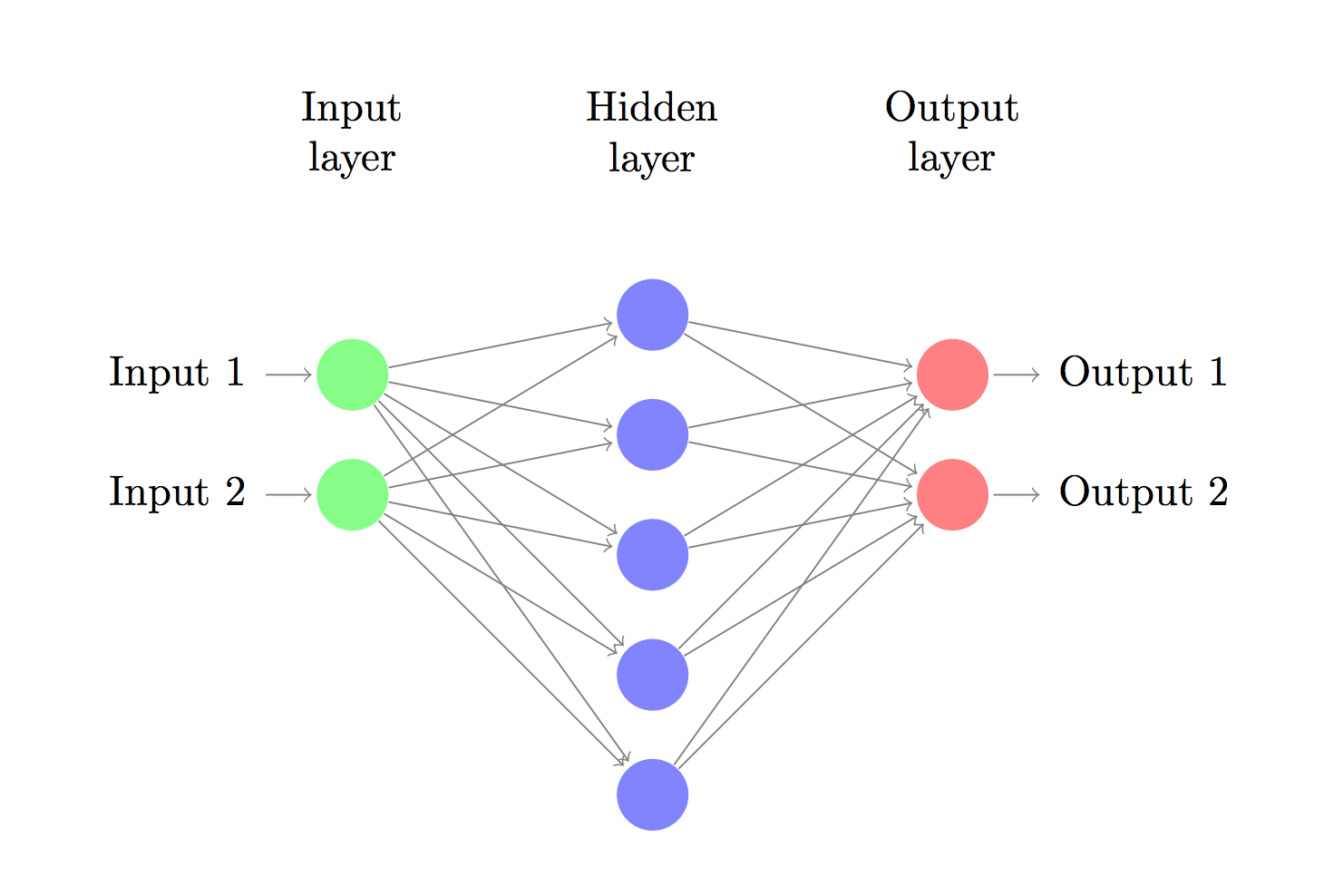

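Neurons are computational units that take electrical inputs (called “spikes”) through their dendrites and channel them to outputs (axons). In our model, the dendrites correspond to the input features $x_1, \dots, x_n$, the output is the result of our hypothesis function, and the extra input $x_0 = 1$ is called the bias unit. There are also intermediate layers of nodes between the input and output layers, called hidden layers, and the nodes inside them are called activation units.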

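Let’s say we have a neural network with one hidden layer: 3 input nodes in layer 1, 3 activation nodes in layer 2, and 1 output node in layer 3. Including the bias unit $x_0$, it looks like:

$$\begin{bmatrix}x_0 \\ x_1 \\ x_2 \\ x_3\end{bmatrix} \rightarrow \begin{bmatrix}a_1^{(2)} \\ a_2^{(2)} \\ a_3^{(2)}\end{bmatrix} \rightarrow h_\Theta(x)$$

Here $a_i^{(j)}$ is the activation of unit $i$ in layer $j$, and $\Theta^{(j)}$ is the matrix of weights controlling the mapping from layer $j$ to layer $j+1$.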

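The values of the “activation” nodes are obtained as follows, where $g$ is the sigmoid (logistic) function:

$$\begin{aligned}
a_1^{(2)} &= g(\Theta_{10}^{(1)}x_0 + \Theta_{11}^{(1)}x_1 + \Theta_{12}^{(1)}x_2 + \Theta_{13}^{(1)}x_3) \\
a_2^{(2)} &= g(\Theta_{20}^{(1)}x_0 + \Theta_{21}^{(1)}x_1 + \Theta_{22}^{(1)}x_2 + \Theta_{23}^{(1)}x_3) \\
a_3^{(2)} &= g(\Theta_{30}^{(1)}x_0 + \Theta_{31}^{(1)}x_1 + \Theta_{32}^{(1)}x_2 + \Theta_{33}^{(1)}x_3)
\end{aligned}$$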

This is saying that we compute our activation nodes by using a 3×4 matrix of parameters. We apply each row of the parameters to our inputs to obtain the value for one activation node.

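Our hypothesis output is the logistic function applied to the weighted sum of our activation nodes (including the bias $a_0^{(2)} = 1$). The weights come from yet another parameter matrix, $\Theta^{(2)}$, which contains the weights for our second layer of nodes:

$$h_\Theta(x) = a_1^{(3)} = g(\Theta_{10}^{(2)}a_0^{(2)} + \Theta_{11}^{(2)}a_1^{(2)} + \Theta_{12}^{(2)}a_2^{(2)} + \Theta_{13}^{(2)}a_3^{(2)})$$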
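The computation for this 3-3-1 network can be sketched in plain Python. The sketch uses only the standard library, and the function and argument names are illustrative, not from the course. Each hidden activation applies one row of the 3×4 matrix $\Theta^{(1)}$ to the inputs with the bias $x_0 = 1$ prepended. The output then applies the 1×4 matrix $\Theta^{(2)}$ to the hidden activations with $a_0^{(2)} = 1$ prepended.

```python
import math


def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def forward_3_3_1(x, theta1, theta2):
    """Return h_Theta(x) for a network with 3 inputs, 3 hidden units, 1 output.

    x      -- [x1, x2, x3]; the bias unit x0 = 1 is prepended here
    theta1 -- 3x4 list of lists; row k holds the weights for a_k^(2)
    theta2 -- 1x4 list of lists; the weights for the output unit
    """
    a1 = [1.0] + list(x)  # [x0, x1, x2, x3]
    # a_k^(2) = g(Theta_k0 x0 + Theta_k1 x1 + Theta_k2 x2 + Theta_k3 x3)
    hidden = [sigmoid(sum(t * v for t, v in zip(row, a1))) for row in theta1]
    a2 = [1.0] + hidden  # prepend the bias unit a_0^(2) = 1
    # h_Theta(x) = a_1^(3): Theta^(2) applied to a^(2), then g
    return sigmoid(sum(t * v for t, v in zip(theta2[0], a2)))


# With all-zero weights every unit outputs g(0) = 0.5.
print(forward_3_3_1([1.0, 2.0, 3.0], [[0.0] * 4] * 3, [[0.0] * 4]))  # 0.5
```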

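The dimensions of these weight matrices are determined as follows: if a network has $s_j$ units in layer $j$ and $s_{j+1}$ units in layer $j+1$, then $\Theta^{(j)}$ has dimension $s_{j+1} \times (s_j + 1)$.

The $+1$ comes from the bias node of layer $j$. In other words, the inputs to $\Theta^{(j)}$ include the bias node, while its outputs do not.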
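The dimension rule can be checked with a tiny helper. The name `theta_shapes` is hypothetical, and the code is plain Python. Layer sizes are given without bias units, as in the rule above.

```python
def theta_shapes(layer_sizes):
    """Return (rows, cols) of each Theta^(j) for layer sizes [s_1, ..., s_L].

    Layer sizes exclude bias units. Theta^(j) is s_{j+1} x (s_j + 1): the
    extra column multiplies the bias unit of layer j, and there is no row
    for the bias unit of layer j+1 because it is fixed at 1, not computed.
    """
    return [(s_next, s_cur + 1) for s_cur, s_next in zip(layer_sizes, layer_sizes[1:])]


print(theta_shapes([3, 3, 1]))  # [(3, 4), (1, 4)]
```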

Vectorized implementation

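For layer $j = 2$ and node $k$, we define a new variable $z$ as:

$$z_k^{(2)} = \Theta_{k,0}^{(1)}x_0 + \Theta_{k,1}^{(1)}x_1 + \cdots + \Theta_{k,n}^{(1)}x_n$$

Therefore the activation formulas above can be rewritten as:

$$a_1^{(2)} = g(z_1^{(2)}), \qquad a_2^{(2)} = g(z_2^{(2)}), \qquad a_3^{(2)} = g(z_3^{(2)})$$

With more newly-defined vectors:

$$x = \begin{bmatrix}x_0 \\ x_1 \\ \vdots \\ x_n\end{bmatrix}, \qquad z^{(j)} = \begin{bmatrix}z_1^{(j)} \\ z_2^{(j)} \\ \vdots \\ z_{s_j}^{(j)}\end{bmatrix}$$

we are able to vectorize it further. Setting $x = a^{(1)}$, we can write:

$$z^{(j)} = \Theta^{(j-1)}a^{(j-1)}$$

Here, we multiply our matrix $\Theta^{(j-1)}$, with dimensions $s_j \times (s_{j-1} + 1)$, by our vector $a^{(j-1)}$ with height $s_{j-1} + 1$. Here $s_j$ is the number of activation nodes in layer $j$, and the height is $n + 1$ when $j = 2$. The product is our vector $z^{(j)}$ with height $s_j$.

Next, we take the $a$’s toward the next layer by applying $g$ element-wise, and then add a bias unit $a_0^{(j)} = 1$ to the result:

$$a^{(j)} = g(z^{(j)})$$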

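Overall, this iteration is called forward propagation. For our network with one hidden layer, it goes like this:

$$\begin{aligned}
a^{(1)} &= x \\
z^{(2)} &= \Theta^{(1)}a^{(1)} \\
a^{(2)} &= g(z^{(2)}) \quad (\text{then add } a_0^{(2)} = 1) \\
z^{(3)} &= \Theta^{(2)}a^{(2)} \\
h_\Theta(x) &= a^{(3)} = g(z^{(3)})
\end{aligned}$$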

Notice that in the last step, and in every assignment of the $a$’s, we are doing exactly the same thing as we did in logistic regression. Adding all these intermediate layers lets us produce more interesting and more complex non-linear hypotheses in an elegant way.
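Forward propagation for any number of layers can be sketched in plain Python, with matrices stored as lists of rows. The helper names are hypothetical. Each step computes $z^{(l+1)} = \Theta^{(l)}a^{(l)}$, applies $g$ element-wise, and prepends a bias unit for every layer except the output.

```python
import math


def sigmoid(z):
    """Logistic function g(z)."""
    return 1.0 / (1.0 + math.exp(-z))


def mat_vec(theta, a):
    """Matrix-vector product Theta * a, with Theta stored as a list of rows."""
    return [sum(t * v for t, v in zip(row, a)) for row in theta]


def forward_propagate(x, thetas):
    """Return the activation vectors [a^(1), ..., a^(L)] for one input x.

    thetas -- [Theta^(1), ..., Theta^(L-1)], Theta^(l) of size s_{l+1} x (s_l + 1).
    Every layer except the output layer gets the bias unit a_0 = 1 prepended;
    the last entry is h_Theta(x) = a^(L).
    """
    a = [1.0] + list(x)  # a^(1) = x, with bias x0 = 1
    activations = [a]
    for l, theta in enumerate(thetas, start=1):
        z = mat_vec(theta, a)        # z^(l+1) = Theta^(l) a^(l)
        a = [sigmoid(v) for v in z]  # a^(l+1) = g(z^(l+1)), element-wise
        if l < len(thetas):          # hidden layer: add its bias unit
            a = [1.0] + a
        activations.append(a)
    return activations


# The 3-3-1 network: Theta^(1) is 3x4 and Theta^(2) is 1x4.
theta1 = [[0.1, 0.2, -0.3, 0.4]] * 3
theta2 = [[-0.5, 1.0, 1.0, 1.0]]
print(forward_propagate([1.0, 0.0, 2.0], [theta1, theta2])[-1])
```

Keeping every $a^{(l)}$, not just the output, is deliberate: backpropagation needs all of them later.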

Example

The sigmoid function $g(z)$ is approximately 1 when $z > 4$ and approximately 0 when $z < -4$. This property lets us build simple examples such as logic gates.

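Let’s construct a neural network with a single output unit:

$$\begin{bmatrix}x_0 \\ x_1 \\ x_2\end{bmatrix} \rightarrow \begin{bmatrix}g(z^{(2)})\end{bmatrix} \rightarrow h_\Theta(x)$$

and fix the first theta matrix as:

$$\Theta^{(1)} = \begin{bmatrix}-30 & 20 & 20\end{bmatrix}$$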

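This will cause the output of our hypothesis to be close to 1 only if both inputs are 1, because $h_\Theta(x) = g(-30 + 20x_1 + 20x_2)$. In other words:

- $x_1 = 0, x_2 = 0$: $g(-30) \approx 0$

- $x_1 = 0, x_2 = 1$: $g(-10) \approx 0$

- $x_1 = 1, x_2 = 0$: $g(-10) \approx 0$

- $x_1 = 1, x_2 = 1$: $g(10) \approx 1$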

Therefore we have constructed one of the fundamental operations in computers by using a small neural network rather than using an actual AND gate.
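The truth table can be reproduced numerically. This Python sketch hard-codes $\Theta^{(1)} = [-30, 20, 20]$ from above. It turns the probability into a bit by thresholding at 0.5, which is an assumption of this sketch.

```python
import math


def sigmoid(z):
    """Logistic function g(z)."""
    return 1.0 / (1.0 + math.exp(-z))


THETA1 = [-30.0, 20.0, 20.0]  # [bias weight, weight for x1, weight for x2]


def and_network(x1, x2):
    """h_Theta(x) = g(-30*x0 + 20*x1 + 20*x2) with the bias unit x0 = 1."""
    return sigmoid(THETA1[0] * 1.0 + THETA1[1] * x1 + THETA1[2] * x2)


for x1 in (0, 1):
    for x2 in (0, 1):
        h = and_network(x1, x2)
        # Threshold at 0.5 to turn h_Theta(x) into a 0/1 prediction.
        print(f"x1={x1} x2={x2} h={h:.6f} prediction={int(h >= 0.5)}")
```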

For examples with a hidden layer, please refer to the notes in Examples and Intuitions II.

Multiclass Classification

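This is done similarly to logistic regression, where we use the one-vs-all method. Say we want to distinguish amongst a car, a pedestrian, a truck, or a motorcycle. Instead of letting the hypothesis equal 1, 2, 3, or 4 for each of them, we set:

$$y^{(i)} \in \left\{ \begin{bmatrix}1\\0\\0\\0\end{bmatrix}, \begin{bmatrix}0\\1\\0\\0\end{bmatrix}, \begin{bmatrix}0\\0\\1\\0\end{bmatrix}, \begin{bmatrix}0\\0\\0\\1\end{bmatrix} \right\}$$

This lets us construct a neural network with 4 output nodes, $h_\Theta(x) \in \mathbb{R}^4$, where each output node corresponds to one class.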
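This Python sketch shows one way the labels might be encoded, and how a prediction could be read back from the 4 output nodes. The class order is an assumption. Reading the prediction as the largest output (arg-max) is a common one-vs-all convention, also assumed here rather than taken from the notes.

```python
CLASSES = ["car", "pedestrian", "truck", "motorcycle"]


def one_hot(label, classes=CLASSES):
    """Encode a class label as the K-dimensional target vector y (K = 4 here)."""
    if label not in classes:
        raise ValueError(f"unknown class: {label!r}")
    return [1 if c == label else 0 for c in classes]


def predict_class(h, classes=CLASSES):
    """Pick the class whose output node has the largest value h_k."""
    best_k = max(range(len(h)), key=lambda k: h[k])
    return classes[best_k]


print(one_hot("truck"))                         # [0, 0, 1, 0]
print(predict_class([0.10, 0.70, 0.20, 0.05]))  # pedestrian
```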

Week 5

Cost function

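Let $L$ be the total number of layers in the network, $s_l$ the number of units (not counting the bias unit) in layer $l$, and $K$ the number of output units (classes). Our cost function for neural networks is a generalization of the one we used for regularized logistic regression:

$$J(\Theta) = -\frac{1}{m}\sum_{i=1}^{m}\sum_{k=1}^{K}\left[y_k^{(i)}\log\left((h_\Theta(x^{(i)}))_k\right) + (1 - y_k^{(i)})\log\left(1 - (h_\Theta(x^{(i)}))_k\right)\right] + \frac{\lambda}{2m}\sum_{l=1}^{L-1}\sum_{i=1}^{s_l}\sum_{j=1}^{s_{l+1}}\left(\Theta_{j,i}^{(l)}\right)^2$$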

We have added a few nested summations to account for our multiple output nodes. In the first part of the equation, before the square brackets, we have an additional nested summation that loops through the number of output nodes.

In the regularization part, after the square brackets, we must account for multiple theta matrices. The number of columns in our current theta matrix is equal to the number of nodes in our current layer (including the bias unit). The number of rows in our current theta matrix is equal to the number of nodes in the next layer (excluding the bias unit). As before with logistic regression, we square every term, leaving out the weights on the bias units (the sum over $i$ starts at 1).

Note:

- the double sum simply adds up the logistic regression costs calculated for each cell in the output layer

- the triple sum simply adds up the squares of all the individual Θs in the entire network.

- the i in the triple sum does not refer to training example i
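The cost can be sketched directly from the formula in Python. The sketch assumes the network outputs $h_\Theta(x^{(i)})$ are already computed and passed in as `H`, which is an illustrative name. It skips column 0 of each $\Theta^{(l)}$ (the bias weights), matching the triple sum starting at $i = 1$.

```python
import math


def nn_cost(H, Y, thetas, lam):
    """Regularized cost J(Theta) for a network with K output units.

    H      -- m lists of K outputs, H[i][k] = (h_Theta(x^(i)))_k, each in (0, 1)
    Y      -- m lists of K 0/1 targets, Y[i][k] = y_k^(i)
    thetas -- list of Theta^(l) matrices (lists of rows); column 0 holds
              the bias weights, which are not regularized
    lam    -- regularization strength lambda
    """
    m = len(Y)
    # Double sum: logistic-regression cost of every output unit, every example.
    data_term = 0.0
    for h_row, y_row in zip(H, Y):
        for h, y in zip(h_row, y_row):
            data_term += y * math.log(h) + (1 - y) * math.log(1 - h)
    # Triple sum: squares of all non-bias weights in all layers.
    reg_term = sum(w * w for theta in thetas for row in theta for w in row[1:])
    return -data_term / m + lam / (2 * m) * reg_term


print(nn_cost([[0.5]], [[1]], [[[0.0, 0.0]]], lam=0.0))  # log(2)
```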

Back propagation

“Backpropagation” is neural-network terminology for computing the gradient we need to minimize our cost function, just as we did with gradient descent in logistic and linear regression. Our goal is to compute:

$$\min_\Theta J(\Theta)$$

That is, we want to minimize our cost function $J$ using an optimal set of parameters in $\Theta$. In this section we’ll look at the equations we use to compute the partial derivatives of $J(\Theta)$:

$$\frac{\partial}{\partial \Theta_{i,j}^{(l)}} J(\Theta)$$

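To do so, we use the backpropagation algorithm. Given a training set $\{(x^{(1)}, y^{(1)}), \dots, (x^{(m)}, y^{(m)})\}$, set $\Delta_{i,j}^{(l)} := 0$ for all $(l, i, j)$. Then, for each training example $t = 1$ to $m$:

1. Set $a^{(1)} := x^{(t)}$.
2. Perform forward propagation to compute $a^{(l)}$ for $l = 2, 3, \dots, L$.
3. Using $y^{(t)}$, compute $\delta^{(L)} = a^{(L)} - y^{(t)}$.
4. Compute $\delta^{(L-1)}, \delta^{(L-2)}, \dots, \delta^{(2)}$ using $\delta^{(l)} = ((\Theta^{(l)})^T \delta^{(l+1)})\ .*\ a^{(l)}\ .*\ (1 - a^{(l)})$. Here $.*$ is element-wise multiplication, $a^{(l)} .* (1 - a^{(l)}) = g'(z^{(l)})$, and the component for the bias unit is discarded.
5. Set $\Delta_{i,j}^{(l)} := \Delta_{i,j}^{(l)} + a_j^{(l)}\delta_i^{(l+1)}$, or in vectorized form $\Delta^{(l)} := \Delta^{(l)} + \delta^{(l+1)}(a^{(l)})^T$.

After finishing the for loop, we compute the matrix $D$ from $\Delta$:

- $D_{i,j}^{(l)} := \frac{1}{m}\left(\Delta_{i,j}^{(l)} + \lambda\Theta_{i,j}^{(l)}\right)$ if $j \neq 0$.

- $D_{i,j}^{(l)} := \frac{1}{m}\Delta_{i,j}^{(l)}$ if $j = 0$.

The capital-delta matrix $\Delta$ is used as an “accumulator” to add up our values as we go along, and $D$ turns those sums into our partial derivatives. Thus we get:

$$\frac{\partial}{\partial \Theta_{i,j}^{(l)}} J(\Theta) = D_{i,j}^{(l)}$$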
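The algorithm can be sketched in plain Python for a fully connected sigmoid network. Matrices are lists of rows, and `thetas[l]` holds $\Theta^{(l+1)}$ because Python lists are 0-indexed. The helper names are hypothetical, and the code favors readability over speed.

```python
import math


def sigmoid(z):
    """Logistic function g(z)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, thetas):
    """Activations [a^(1), ..., a^(L)]; all but the output layer include a_0 = 1."""
    a = [1.0] + list(x)
    acts = [a]
    for l, theta in enumerate(thetas, start=1):
        a = [sigmoid(sum(t * v for t, v in zip(row, a))) for row in theta]
        if l < len(thetas):
            a = [1.0] + a
        acts.append(a)
    return acts


def backprop(X, Y, thetas, lam):
    """Return one gradient matrix D per weight matrix, i.e. dJ/dTheta."""
    m = len(X)
    # Accumulators Delta, one per weight matrix, all starting at 0.
    Deltas = [[[0.0] * len(row) for row in theta] for theta in thetas]
    for x, y in zip(X, Y):  # loop over the training examples
        acts = forward(x, thetas)  # a^(1) = x, then forward propagation
        delta = [a - t for a, t in zip(acts[-1], y)]  # output error a^(L) - y
        # Walk backwards: thetas[l] maps acts[l] to acts[l + 1].
        for l in range(len(thetas) - 1, -1, -1):
            a_l = acts[l]  # includes the bias unit at index 0
            # Accumulate Delta += delta_next * a^T for this weight matrix.
            for i, d in enumerate(delta):
                for j, a in enumerate(a_l):
                    Deltas[l][i][j] += d * a
            if l > 0:
                # delta = (Theta^T delta_next) .* a .* (1 - a), skipping j = 0
                # because the bias unit has no error term.
                theta = thetas[l]
                delta = [
                    sum(theta[i][j] * delta[i] for i in range(len(delta)))
                    * a_l[j] * (1.0 - a_l[j])
                    for j in range(1, len(a_l))
                ]
    # D_ij = (Delta_ij + lambda * Theta_ij) / m if j != 0, else Delta_ij / m
    return [
        [
            [(Deltas[l][i][j] + (lam * thetas[l][i][j] if j != 0 else 0.0)) / m
             for j in range(len(thetas[l][i]))]
            for i in range(len(thetas[l]))
        ]
        for l in range(len(thetas))
    ]
```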

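In the algorithm, step 4 does the most important job: $\delta_j^{(l)}$ is called the “error” of $a_j^{(l)}$ (unit $j$ in layer $l$). More formally, in the binary classification case for a single training example $t$ and ignoring regularization, the delta values are the derivatives of the cost with respect to $z$:

$$\delta_j^{(l)} = \frac{\partial}{\partial z_j^{(l)}} \text{cost}(t), \qquad \text{cost}(t) = -\left[y^{(t)}\log\left(h_\Theta(x^{(t)})\right) + (1 - y^{(t)})\log\left(1 - h_\Theta(x^{(t)})\right)\right]$$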
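For the output layer, this claim can be checked numerically. With a sigmoid output unit and $\text{cost}(t)$ as defined above, $\partial\,\text{cost}(t)/\partial z^{(L)}$ should equal $a^{(L)} - y^{(t)}$, the error that backpropagation starts from. This Python sketch compares the two at a few arbitrary points. The names are illustrative.

```python
import math


def sigmoid(z):
    """Logistic function g(z)."""
    return 1.0 / (1.0 + math.exp(-z))


def cost_t(z, y):
    """cost(t) = -[y log g(z) + (1 - y) log(1 - g(z))] for a single output unit."""
    h = sigmoid(z)
    return -(y * math.log(h) + (1 - y) * math.log(1 - h))


def delta_output(z, y):
    """The backpropagation error of the output unit: a - y with a = g(z)."""
    return sigmoid(z) - y


def numeric_derivative(z, y, eps=1e-6):
    """Two-sided finite-difference estimate of d cost(t) / dz."""
    return (cost_t(z + eps, y) - cost_t(z - eps, y)) / (2 * eps)


for z, y in [(-2.0, 0), (0.3, 1), (1.5, 0)]:
    print(f"z={z:+.1f} y={y}  a-y={delta_output(z, y):+.6f}  numeric={numeric_derivative(z, y):+.6f}")
```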

Implementation Note: Unrolling Parameters

In order to use optimizing functions such as “fminunc()” when training the neural network, we will want to “unroll” all the elements and put them into one long vector:

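Say we have the weight matrices $\Theta^{(1)}, \Theta^{(2)}, \Theta^{(3)}$ (Theta1, Theta2, Theta3). We can unroll them as follows: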

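```matlab
% In order to use optimizing functions such as "fminunc()", unroll all parameters into one long vector:
thetaVector = [ Theta1(:); Theta2(:); Theta3(:) ];
```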
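The same unrolling, and the inverse reshaping back into matrices, can be sketched in Python. The sketch mirrors Octave’s column-major `Theta1(:)` ordering. That order is a choice made here: any order works as long as unrolling and reshaping agree. The function names are hypothetical.

```python
def unroll(matrices):
    """Flatten matrices into one vector, column by column (like Octave's M(:))."""
    vec = []
    for M in matrices:
        rows, cols = len(M), len(M[0])
        vec.extend(M[i][j] for j in range(cols) for i in range(rows))
    return vec


def reshape_all(vec, shapes):
    """Inverse of unroll: rebuild matrices with the given (rows, cols) shapes."""
    matrices, pos = [], 0
    for rows, cols in shapes:
        chunk = vec[pos:pos + rows * cols]
        # Element (i, j) sits at offset j * rows + i in column-major order.
        matrices.append([[chunk[j * rows + i] for j in range(cols)] for i in range(rows)])
        pos += rows * cols
    if pos != len(vec):
        raise ValueError("shapes do not account for every element")
    return matrices


print(unroll([[[1, 2], [3, 4]]]))  # [1, 3, 2, 4]
```

For three matrices of sizes 10×11, 10×11 and 1×11, `reshape_all(vec, [(10, 11), (10, 11), (1, 11)])` recovers them from the 231-element vector.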

Gradient Checking

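In some cases the cost function converges even when the neural network is not learning in the right way. Gradient checking will assure us that our backpropagation works as intended. We can approximate the derivative of our cost function with:

$$\frac{\partial}{\partial\Theta} J(\Theta) \approx \frac{J(\Theta + \epsilon) - J(\Theta - \epsilon)}{2\epsilon}$$

With multiple theta matrices, we can approximate the derivative with respect to $\Theta_j$ as follows:

$$\frac{\partial}{\partial\Theta_j} J(\Theta) \approx \frac{J(\Theta_1, \dots, \Theta_j + \epsilon, \dots, \Theta_n) - J(\Theta_1, \dots, \Theta_j - \epsilon, \dots, \Theta_n)}{2\epsilon}$$

In MATLAB, we can do it as follows: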

```matlab
epsilon = 1e-4;  % size of the perturbation applied to each element of theta
```

A small value for ϵ (epsilon), such as ϵ = 0.0001, makes the two-sided difference a close approximation of the derivative. If the value for ϵ is too small, however, we can end up with numerical problems from floating-point round-off.

We previously saw how to calculate the deltaVector. Once we compute our gradApprox vector, we can check that gradApprox ≈ deltaVector. After you have verified that your backpropagation algorithm is correct, you don’t need to compute gradApprox again, because the code to compute it can be very slow.
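A Python sketch of gradient checking on an unrolled parameter list follows. `grad_approx` implements the two-sided difference above with ϵ = 10⁻⁴. The check that gradApprox ≈ deltaVector uses a relative-difference test with tolerance 10⁻⁷. That criterion and tolerance are assumptions; the notes only say “≈”. The toy cost `J` stands in for the network’s cost.

```python
import math


def grad_approx(J, theta, epsilon=1e-4):
    """Two-sided difference estimate of dJ/dtheta_i for every i.

    J     -- cost function taking an unrolled parameter list
    theta -- unrolled parameter list; it is copied, never modified
    """
    approx = []
    for i in range(len(theta)):
        theta_plus = list(theta)
        theta_plus[i] += epsilon
        theta_minus = list(theta)
        theta_minus[i] -= epsilon
        approx.append((J(theta_plus) - J(theta_minus)) / (2 * epsilon))
    return approx


def gradients_match(approx, analytic, tol=1e-7):
    """True if ||approx - analytic|| / ||approx + analytic|| is below tol."""
    diff = math.sqrt(sum((a - b) ** 2 for a, b in zip(approx, analytic)))
    scale = math.sqrt(sum((a + b) ** 2 for a, b in zip(approx, analytic)))
    if scale == 0.0:
        return diff == 0.0
    return diff / scale < tol


# Toy cost J(t) = t0^2 + 3*t0*t1 with known gradient [2*t0 + 3*t1, 3*t0].
def J(t):
    return t[0] ** 2 + 3 * t[0] * t[1]


theta = [1.0, 2.0]
print(gradients_match(grad_approx(J, theta), [8.0, 3.0]))  # True
print(gradients_match(grad_approx(J, theta), [8.0, 3.5]))  # False
```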

Random Initialization

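Initializing all theta weights to zero does not work with neural networks. When we backpropagate, all nodes in a layer receive the same update, so they keep computing the same value as each other. Instead, we can randomly initialize each $\Theta_{ij}^{(l)}$ to a random value in $[-\epsilon, \epsilon]$. Scaling the output of rand (which lies between 0 and 1) by $2\epsilon$ and subtracting $\epsilon$ guarantees that we get this bound. In MATLAB, we can do: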

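```matlab
% Dimensions: Theta1 is 10x11, Theta2 is 10x11 and Theta3 is 1x11 (same pattern); INIT_EPSILON is set beforehand.
Theta1 = rand(10,11) * (2 * INIT_EPSILON) - INIT_EPSILON;
```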

rand(x,y) is just a function in octave that will initialize a matrix of random real numbers between 0 and 1.

(Note: the epsilon used above is unrelated to the epsilon from Gradient Checking)
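A Python counterpart of the `rand(...) * (2 * INIT_EPSILON) - INIT_EPSILON` pattern is sketched below. The value 0.12 for `INIT_EPSILON` is hypothetical and chosen only for illustration. The generator is seeded only so the example is reproducible.

```python
import random


def rand_initialize(rows, cols, init_epsilon, rng=random):
    """Return a rows x cols matrix with entries uniform in [-init_epsilon, init_epsilon).

    Mirrors rand(rows, cols) * (2 * INIT_EPSILON) - INIT_EPSILON: rng.random()
    lies in [0, 1), so scaling by 2 * init_epsilon and then subtracting
    init_epsilon shifts it into the symmetric range.
    """
    return [[rng.random() * (2 * init_epsilon) - init_epsilon for _ in range(cols)]
            for _ in range(rows)]


INIT_EPSILON = 0.12      # hypothetical bound, chosen only for illustration
rng = random.Random(42)  # seeded so the example is reproducible
Theta1 = rand_initialize(10, 11, INIT_EPSILON, rng)
Theta2 = rand_initialize(10, 11, INIT_EPSILON, rng)
Theta3 = rand_initialize(1, 11, INIT_EPSILON, rng)
```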

Putting it Together

First, pick a network architecture; choose the layout of your neural network, including how many hidden units in each layer and how many layers in total you want to have.

- Number of input units = dimension of features

- Number of output units = number of classes

- Number of hidden units per layer = usually the more the better (balanced against the cost of computation, which increases with more hidden units)

- Defaults: 1 hidden layer. If you have more than 1 hidden layer, then it is recommended that you have the same number of units in every hidden layer.

Training a Neural Network

- Randomly initialize the weights

- Implement forward propagation to get $h_\Theta(x^{(i)})$ for any $x^{(i)}$

- Implement the cost function

- Implement backpropagation to compute partial derivatives

- Use gradient checking to confirm that your backpropagation works. Then disable gradient checking.

- Use gradient descent or a built-in optimization function to minimize the cost function with the weights in theta.

Note that:

- Ideally, you want $h_\Theta(x^{(i)}) \approx y^{(i)}$. This will minimize our cost function. However, keep in mind that J(Θ) is not convex, so we can end up in a local minimum instead.

- When we perform forward and back propagation, we loop on every training example:

```matlab
for i = 1:m,  % forward- and back-propagate (x(i), y(i)) to get a(l) and delta(l) for l = 2,...,L
end;
```
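The training steps can be put together in a single Python sketch: random initialization, a loop over every training example for forward and back propagation, the regularized cost, and plain batch gradient descent. The learning rate, iteration count, initialization bound and AND data set are illustrative choices. Gradient checking is left out, on the assumption that it was already run and then disabled. Because J(Θ) is not convex, a different seed can end in a different local minimum.

```python
import math
import random


def sigmoid(z):
    """Logistic function g(z)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, thetas):
    """Activations [a^(1), ..., a^(L)]; all but the output layer include a_0 = 1."""
    a = [1.0] + list(x)
    acts = [a]
    for l, theta in enumerate(thetas, start=1):
        a = [sigmoid(sum(t * v for t, v in zip(row, a))) for row in theta]
        if l < len(thetas):
            a = [1.0] + a
        acts.append(a)
    return acts


def cost_and_gradients(X, Y, thetas, lam):
    """Regularized J(Theta) and its gradient matrices D, via backpropagation."""
    m = len(X)
    J = 0.0
    grads = [[[0.0] * len(row) for row in theta] for theta in thetas]
    for x, y in zip(X, Y):  # for i = 1:m
        acts = forward(x, thetas)  # forward propagation
        for h, t in zip(acts[-1], y):
            J -= t * math.log(h) + (1 - t) * math.log(1 - h)
        delta = [h - t for h, t in zip(acts[-1], y)]  # output error
        for l in range(len(thetas) - 1, -1, -1):  # back propagation
            a_l = acts[l]
            for i, d in enumerate(delta):
                for j, a in enumerate(a_l):
                    grads[l][i][j] += d * a
            if l > 0:
                delta = [sum(thetas[l][i][j] * delta[i] for i in range(len(delta)))
                         * a_l[j] * (1.0 - a_l[j]) for j in range(1, len(a_l))]
    reg = sum(w * w for theta in thetas for row in theta for w in row[1:])
    J = J / m + lam / (2 * m) * reg
    for l, theta in enumerate(thetas):
        for i, row in enumerate(theta):
            for j in range(len(row)):
                grads[l][i][j] = (grads[l][i][j] + (lam * row[j] if j != 0 else 0.0)) / m
    return J, grads


def train(X, Y, layer_sizes, lam=0.0, alpha=1.0, iters=1000, init_epsilon=0.12, seed=0):
    """Randomly initialize the weights, then run batch gradient descent."""
    rng = random.Random(seed)
    thetas = [[[rng.random() * 2 * init_epsilon - init_epsilon for _ in range(s_in + 1)]
               for _ in range(s_out)]
              for s_in, s_out in zip(layer_sizes, layer_sizes[1:])]
    history = []
    for _ in range(iters):
        J, grads = cost_and_gradients(X, Y, thetas, lam)
        history.append(J)
        for theta, grad in zip(thetas, grads):
            for row, grad_row in zip(theta, grad):
                for j in range(len(row)):
                    row[j] -= alpha * grad_row[j]  # Theta := Theta - alpha * D
    return thetas, history


# Toy data: the AND function from the Week 4 example.
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
Y = [[0], [0], [0], [1]]
thetas, history = train(X, Y, layer_sizes=[2, 2, 1])
print(f"J before: {history[0]:.4f}, J after: {history[-1]:.4f}")
```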